How politics makes us stupid

There’s a simple theory underlying much of American politics. It sits hopefully at the base of almost every speech, every op-ed, every article, and every panel discussion. It courses through the Constitution and is a constant in President Obama’s most stirring addresses. It’s what we might call the More Information Hypothesis: the belief that many of our most bitter political battles are mere misunderstandings. The cause of these misunderstandings? Too little information — be it about climate change, or taxes, or Iraq, or the budget deficit. If only the citizenry were more informed, the thinking goes, then there wouldn’t be all this fighting. It’s a seductive model. It suggests our fellow countrymen aren’t wrong so much as they’re misguided, or ignorant, or — most appealingly — misled by scoundrels from the other party. It holds that our debates are tractable and that the answers to our toughest problems aren’t very controversial at all. The theory is particularly prevalent in Washington, where partisans devote enormous amounts of energy to persuading each other that there’s really a right answer to the difficult questions in American politics — and that they have it. But the More Information Hypothesis isn’t just wrong. It’s backwards. Cutting-edge research shows that the more information partisans get, the deeper their disagreements become.

In April and May of 2013, Yale Law professor Dan Kahan, working with coauthors Ellen Peters, Erica Cantrell Dawson, and Paul Slovic, set out to test a question that continually puzzles scientists: why isn’t good evidence more effective in resolving political debates? For instance, why doesn’t the mounting proof that climate change is a real threat persuade more skeptics?

The leading theory, Kahan and his coauthors wrote, is the Science Comprehension Thesis, which says the problem is that the public doesn’t know enough about science to judge the debate. It’s a version of the More Information Hypothesis: a smarter, better educated citizenry wouldn’t have all these problems reading the science and accepting its clear conclusion on climate change.

But Kahan and his team had an alternative hypothesis. Perhaps people aren’t held back by a lack of knowledge. After all, they don’t typically doubt the findings of oceanographers or the existence of other galaxies. Perhaps there are some kinds of debates where people don’t want to find the right answer so much as they want to win the argument. Perhaps humans reason for purposes other than finding the truth — purposes like increasing their standing in their community, or ensuring they don’t piss off the leaders of their tribe. If this hypothesis proved true, then a smarter, better-educated citizenry wouldn’t put an end to these disagreements. It would just mean the participants are better equipped to argue for their own side.

Kahan and his team came up with a clever way to test which theory was right. They took 1,000 Americans, surveyed their political views, and then gave them a standard test used for assessing math skills. Then they presented them with a brainteaser. In its first form, it looked like this:

Medical researchers have developed a new cream for treating skin rashes. New treatments often work but sometimes make rashes worse. Even when treatments don't work, skin rashes sometimes get better and sometimes get worse on their own. As a result, it is necessary to test any new treatment in an experiment to see whether it makes the skin condition of those who use it better or worse than if they had not used it.

Researchers have conducted an experiment on patients with skin rashes. In the experiment, one group of patients used the new cream for two weeks, and a second group did not use the new cream.

In each group, the number of people whose skin condition got better and the number whose condition got worse are recorded in the table below. Because patients do not always complete studies, the total number of patients in each of the two groups is not exactly the same, but this does not prevent assessment of the results.

Please indicate whether the experiment shows that using the new cream is likely to make the skin condition better or worse.

What result does the study support?

- People who used the skin cream were more likely to get better than those who didn't.

- People who used the skin cream were more likely to get worse than those who didn't.

It’s a tricky problem meant to exploit a common mental shortcut. A glance at the numbers leaves most people with the impression that the skin cream improved the rash. After all, more than twice as many people who used the skin cream saw their rash improve. But if you actually calculate the ratios, the truth is just the opposite: about 25 percent of the people who used the skin cream saw their rashes worsen, compared to only about 16 percent of the people who didn’t use the skin cream.
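To make the arithmetic concrete, here is a minimal sketch of the calculation. The table itself isn’t reproduced here, so the four counts below are assumed values, chosen only because they are consistent with the percentages quoted above; the names `cream`, `no_cream`, and `share_worse` are likewise illustrative.

```python
# Assumed 2x2 counts (not taken from the text): picked to be consistent with
# the "about 25 percent" and "about 16 percent" figures quoted in the article.
cream = {"better": 223, "worse": 75}      # patients who used the cream
no_cream = {"better": 107, "worse": 21}   # patients who did not


def share_worse(better: int, worse: int) -> float:
    """Fraction of a group whose rash got worse."""
    return worse / (better + worse)


cream_worse = share_worse(**cream)
no_cream_worse = share_worse(**no_cream)

# The shortcut: compare raw counts of people who improved.
print("Improved with cream:   ", cream["better"])
print("Improved without cream:", no_cream["better"])

# The careful reading: compare rates within each group.
print(f"Worsened with cream:    {cream_worse:.1%}")
print(f"Worsened without cream: {no_cream_worse:.1%}")
```

The raw counts favor the cream only because that group is much larger. Once each group is judged against its own total, the cream users come out worse.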

This kind of problem is used in social science experiments to test people’s abilities to slow down and consider the evidence arrayed before them. It forces subjects to suppress their impulse to go with what looks right and instead do the difficult mental work of figuring out what is right. In Kahan’s sample, most people failed. This was true for both liberals and conservatives. The exceptions, predictably, were the people who had shown themselves unusually good at math: they tended to get the problem right. These results support the Science Comprehension Thesis: the better subjects were at math, the more likely they were to stop, work through the evidence, and find the right answer.

But Kahan and his coauthors also drafted a politicized version of the problem. This version used the same numbers as the skin-cream question, but instead of being about skin creams, the narrative set-up focused on a proposal to ban people from carrying concealed handguns in public. The 2x2 box now compared crime data in the cities that banned handguns against crime data in the cities that didn’t. In some cases, the numbers, properly calculated, showed that the ban had worked to cut crime. In others, the numbers showed it had failed.

When subjects were presented with this problem, a funny thing happened: how good they were at math stopped predicting how well they did on the test. Now it was ideology that drove the answers. Liberals were extremely good at solving the problem when doing so proved that gun-control legislation reduced crime. But when presented with the version of the problem that suggested gun control had failed, their math skills stopped mattering. They tended to get the problem wrong no matter how good they were at math. Conservatives exhibited the same pattern, just in reverse.

Being better at math didn’t just fail to help partisans converge on the right answer. It actually drove them further apart. Partisans with weak math skills were 25 percentage points likelier to get the answer right when it fit their ideology. Partisans with strong math skills were 45 percentage points likelier to get the answer right when it fit their ideology. The smarter the person is, the dumber politics can make them.

Consider how utterly insane that is: being better at math made partisans less likely to solve the problem correctly when solving the problem correctly meant betraying their political instincts. People weren’t reasoning to get the right answer; they were reasoning to get the answer that they wanted to be right.

The skin-rash experiment wasn’t the first time Kahan had shown that partisanship has a way of short-circuiting intelligence. In another study, he tested people’s scientific literacy alongside their ideology and then asked about the risks posed by climate change. If the problem was truly that people needed to know more about science to fully appreciate the dangers of a warming climate, then their concern should’ve risen alongside their knowledge. But here, too, the opposite was true: among people who were already skeptical of climate change, scientific literacy made them more skeptical of climate change.

This will make sense to anyone who’s ever read the work of a serious climate change denialist. It’s filled with facts and figures, graphs and charts, studies and citations. Much of the data is wrong or irrelevant. But it feels convincing. It’s a terrific performance of scientific inquiry. And climate-change skeptics who immerse themselves in it end up far more confident that global warming is a hoax than people who haven’t spent much time studying the issue. More information, in this context, doesn’t help skeptics discover the best evidence. Instead, it sends them searching for evidence that seems to prove them right. And in the age of the internet, such evidence is never very far away.

In another experiment Kahan and his coauthors gave out sample biographies of highly accomplished scientists alongside a summary of the results of their research. Then they asked whether the scientist was indeed an expert on the issue. It turned out that people’s actual definition of "expert" is "a credentialed person who agrees with me." For instance, when the researcher’s results underscored the dangers of climate change, people who tended to worry about climate change were 72 percentage points more likely to agree that the researcher was a bona fide expert. When the same researcher with the same credentials was attached to results that cast doubt on the dangers of global warming, people who tended to dismiss climate change were 54 percentage points more likely to see the researcher as an expert.

Kahan is quick to note that, most of the time, people are perfectly capable of being convinced by the best evidence. There’s a lot of disagreement about climate change and gun control, for instance, but almost none over whether antibiotics work, or whether the H1N1 flu is a problem, or whether heavy drinking impairs people’s ability to drive. Rather, our reasoning becomes rationalizing when we’re dealing with questions where the answers could threaten our tribe — or at least our social standing in our tribe. And in those cases, Kahan says, we’re being perfectly sensible when we fool ourselves.

Sean Hannity. Uri Schanker/WireImage

Imagine what would happen to, say, Sean Hannity if he decided tomorrow that climate change was the central threat facing the planet. Initially, his viewers would think he was joking. But soon, they’d begin calling in furiously. Some would organize boycotts of his program. Dozens, perhaps hundreds, of professional climate skeptics would begin angrily refuting Hannity’s new crusade. Many of Hannity’s friends in the conservative media world would back away from him, and some would seek advantage by denouncing him. Some of the politicians he respects would be furious at his betrayal of the cause. He would lose friendships, viewers, and money. He could ultimately lose his job. And along the way he would cause himself immense personal pain as he systematically alienated his closest political and professional allies. The world would have to update its understanding of who Sean Hannity is and what he believes, and so too would Sean Hannity. And changing your identity is a psychologically brutal process.

Kahan doesn’t find it strange that we react to threatening information by mobilizing our intellectual artillery to destroy it. He thinks it’s strange that we would expect rational people to do anything else. "Nothing any ordinary member of the public personally believes about the existence, causes, or likely consequences of global warming will affect the risk that climate change poses to her, or to anyone or anything she cares about," Kahan writes. "However, if she forms the wrong position on climate change relative to the one that people with whom she has a close affinity, and on whose high regard and support she depends in myriad ways in her daily life, she could suffer extremely unpleasant consequences, from shunning to the loss of employment."

Kahan calls this theory Identity-Protective Cognition: "As a way of avoiding dissonance and estrangement from valued groups, individuals subconsciously resist factual information that threatens their defining values." Elsewhere, he puts it even more pithily: "What we believe about the facts," he writes, "tells us who we are." And the most important psychological imperative most of us have in a given day is protecting our idea of who we are, and our relationships with the people we trust and love.

Anyone who has ever found themselves in an angry argument with their political or social circle will know how threatening it feels. For a lot of people, being "right" just isn’t worth picking a bitter fight with the people they care about. That’s particularly true in a place like Washington, where social circles and professional lives are often organized around people’s politics, and the boundaries of what those tribes believe are getting sharper.

In the mid-20th century, the two major political parties were ideologically diverse. Democrats in the South were often more conservative than Republicans in the North. The strange jumble in political coalitions made disagreement easier. The other party wasn’t so threatening because it included lots of people you agreed with. Today, however, the parties have sorted by ideology, and now neither the House nor the Senate has any Democrats who are more conservative than any Republicans, or vice versa. This sorting has made the tribal pull of the two parties much more powerful because the other party now exists as a clear enemy.

One consequence of this is that Washington has become a machine for making identity-protective cognition easier. Each party has its allied think tanks, its go-to experts, its favored magazines, its friendly blogs, its sympathetic pundits, its determined activists, its ideological moneymen. Both the professionals and the committed volunteers who make up the party machinery are members of social circles, Twitter worlds, Facebook groups, workplaces, and many other ecosystems that would make life very unpleasant for them if they strayed too far from the faith. And so these institutions end up employing a lot of very smart, very sincere people whose formidable intelligence makes certain that they typically stay in line. To do anything else would upend their day-to-day lives.

The problem, of course, is that these people are also affecting, and in some cases controlling, the levers of government. And this, Kahan says, is where identity-protective cognition gets dangerous. What’s sensible for individuals can be deadly for groups. "Although it is effectively costless for any individual to form a perception of climate-change risk that is wrong but culturally congenial, it is very harmful to collective welfare for individuals in aggregate to form beliefs this way," Kahan writes. The ice caps don’t care if it’s rational for us to worry about our friendships. If the world keeps warming, they’re going to melt regardless of how good our individual reasons for doing nothing are.

To spend much time with Kahan’s research is to stare into a kind of intellectual abyss. If the work of gathering evidence and reasoning through thorny, polarizing political questions is actually the process by which we trick ourselves into finding the answers we want, then what’s the right way to search for answers? How can we know the answers we come up with, no matter how well-intentioned, aren’t just more motivated cognition? How can we know the experts we’re relying on haven’t subtly biased their answers, too? How can I know that this article isn’t a form of identity protection? Kahan’s research tells us we can’t trust our own reason. How do we reason our way out of that?

The place to start, I figured, was talking to Dan Kahan. I expected a conversation with an intellectual nihilist. But Kahan doesn’t sound like a creature of the abyss. He sounds like, well, what he is: a Harvard-educated lawyer who clerked for Thurgood Marshall on the Supreme Court and now teaches at Yale Law School. He sounds like a guy who has lived his adult life excelling in institutions dedicated to the idea that men and women of learning can solve society’s hardest problems and raise its next generation of leaders. And when we spoke, he seemed uncomfortable with his findings. Unlike many academics who want to emphasize the import of their work, he seemed to want to play it down.

"We fixate on the cases where things aren’t working," he says. "The consequences can be dramatic, so it makes sense we pay attention to them. But they’re the exception. Many more things just work. They work so well that they’re almost not noticeable. What I’m trying to understand is really a pathology. I want to identify the dynamics that lead to these nonproductive debates." In fact, Kahan wants to go further than that. "The point of doing studies like this is to show how to fix the problem."

A nurse loads a syringe with a vaccine against hepatitis. Robyn Beck/AFP/Getty Images

Consider the human papillomavirus vaccine, he says. That’s become a major cultural battle in recent years with many parents insisting that the government has no right to mandate a vaccine that makes it easier for teenagers to have sex. Kahan compares the HPV debacle to the relatively smooth rollout of the hepatitis B vaccine.

"What about the hepatitis B vaccine?" he asks. "That’s also a sexually transmitted disease. It also causes cancer. It was proposed by the Centers for Disease Control as a mandatory vaccine. And during the years in which we were fighting over HPV the hepatitis B vaccine uptake was over 90 percent. So why did HPV become what it became?"

Kahan’s answer is that the science community has a crappy communications team. Actually, scratch that: Kahan doesn’t think they have any communications team at all. "We don’t have an organized science-intelligence communication brain in our society," he says. "We only have a brainstem. We don’t have people watching for controversies over things like vaccines and responding to them."

In Kahan’s telling, the HPV vaccine was a symphony of missteps. Its manufacturer, Merck, wanted it fast-tracked onto shelves. The company sponsored legislative campaigns in statehouses to make it mandatory. To get it to customers more quickly, it sought approval for girls before seeking approval for boys. All this, he believes, allowed the process to be politicized, while a calmer, slower, more technocratic approach could have kept the peace. "I think it would’ve been appropriate for the FDA, in considering whether to fast-track the vaccine, to consider these science communication risks."

Kahan’s studies, depressing as they are, are also the source of his optimism: he thinks that if researchers can just develop a more evidence-based model of how people treat questions of science as questions of identity then scientists could craft a communications strategy that would avoid those pitfalls. "My hypothesis is we can use reason to identify the sources of the threats to our reason and then we can use our reason to devise methods to manage and control those processes," he says. That’s a lot of reasoning. As a concrete example, he offers the government’s approach to regulatory decisions. "There is a process in the Office of Management and Budget where every decision has to pass a cost-benefit test," he says. "Why isn’t there a process in the FDA evaluating every decision for science-communication impact?"

But when I ask him whether advocates for the HPV vaccine would really stay quiet if the FDA refused to fast-track a lifesaving treatment on grounds that a slower roll-out would be better PR, he doesn’t have much of an answer. Indeed, pressing him on these questions makes me wonder whether Kahan isn’t engaged in a bit of identity-protective cognition of his own. Having helped uncover a powerful mental process that undermines the institutions he’s most devoted to, he’s rationalized the problem away as a mere artifact of a poor communications strategy.

Kahan’s answers also take as a premise that scientists play a very powerful role in driving the public discourse. That is, to say the least, debatable. "If you taught everyone who cares about science to communicate properly you still couldn’t control Fox News," says Chris Mooney, a Mother Jones writer who focuses on the intersection between science and politics. "And that matters more than how individual scientists communicate."

But Kahan would never deny that identity-protective cognition afflicts him too. In fact, recognizing that is core to his strategy of avoiding it. "I’m positive that at any given moment some fraction of the things I believe, I believe for identity-protective purposes," he says. "That gives you a kind of humility."

Recognizing the problem is not the same as fixing it, though. I asked Kahan how he tries to guard against identity protection in his everyday life. The answer, he said, is to try to find disagreement that doesn’t threaten you and your social group — and one way to do that is to consciously seek it out in your group. "I try to find people who I actually think are like me — people I’d like to hang out with — but they don’t believe the things that everyone else like me believes," he says. "If I find some people I identify with, I don’t find them as threatening when they disagree with me." It’s good advice, but it requires, as a prerequisite, a desire to expose yourself to uncomfortable evidence — and a confidence that the knowledge won’t hurt you.

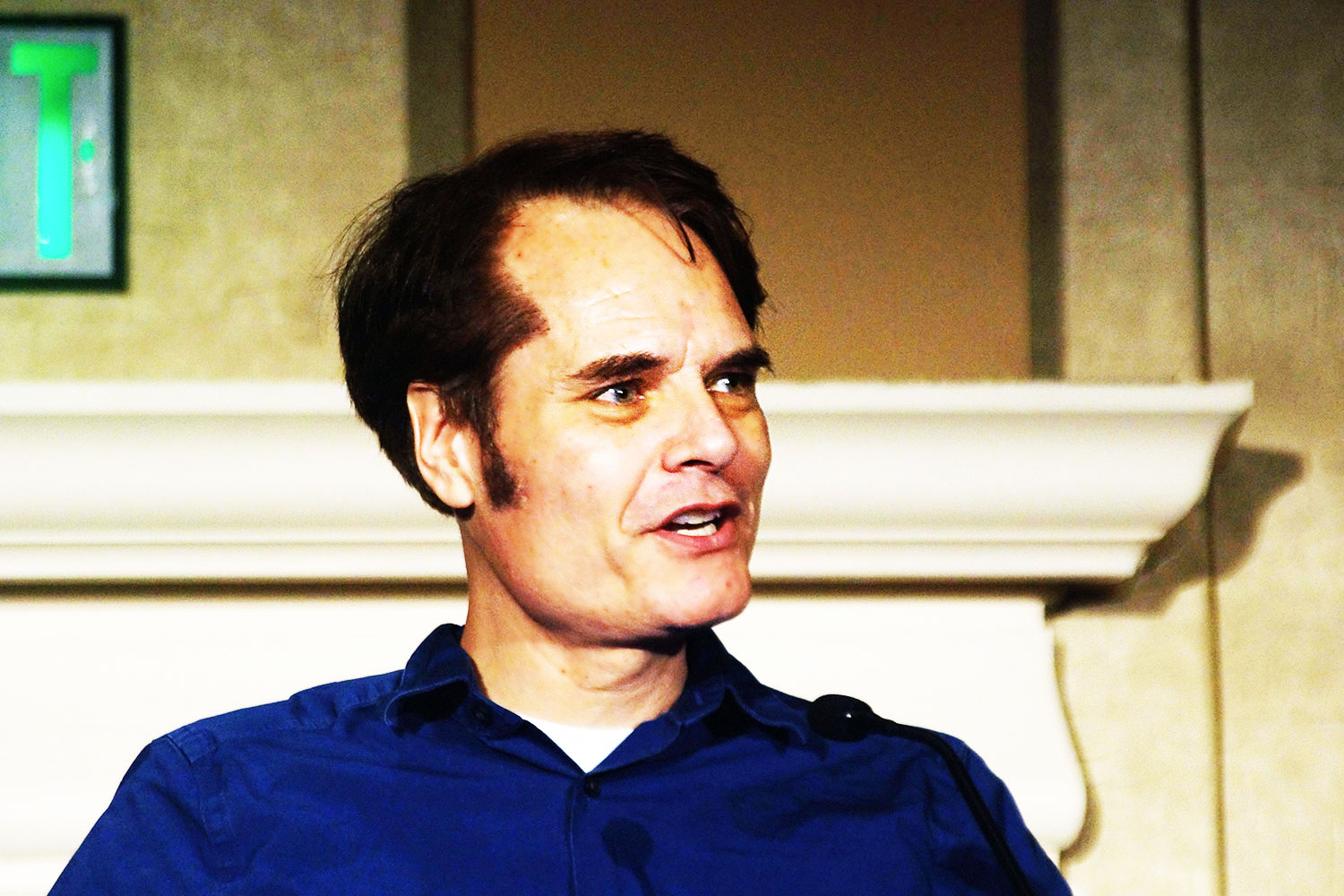

(Dan Kahan. Wikimedia Commons)

At one point in our interview Kahan does stare over the abyss, if only for a moment. He recalls a dissent written by Supreme Court Justice Antonin Scalia in a case about overcrowding in California prisons. Scalia dismissed the evidentiary findings of a lower court as motivated by policy preferences. "I find it really demoralizing, but I think some people just view empirical evidence as a kind of device," Kahan says.

But Scalia’s comments were perfectly predictable given everything Kahan had found. Scalia is a highly ideological, tremendously intelligent individual with a very strong attachment to conservative politics. He’s the kind of identity-protector who has publicly said he stopped subscribing to the Washington Post because he "just couldn’t handle it anymore," and so he now cocoons himself in the more congenial pages of the Washington Times and the Wall Street Journal. Isn’t it the case, I asked Kahan, that everything he’s found would predict that Scalia would convince himself of whatever he needed to think to get to the answers he wanted?

The question seemed to rattle Kahan a bit. "The conditions that make a person subject to that way of looking at the evidence," he said slowly, "are things that should be viewed as really terrifying, threatening influences in American life. That’s what threatens the possibility of having democratic politics enlightened by evidence."

The threat is real. Washington is a bitter war between two well-funded, sharply-defined tribes that have their own machines for generating evidence and their own enforcers of orthodoxy. It’s a perfect storm for making smart people very stupid.

The silver lining is that politics doesn’t just take place in Washington. The point of politics is policy. And most people don’t experience policy as a political argument. They experience it as a tax bill, or a health insurance card, or a deployment. And, ultimately, there’s no spin effective enough to persuade Americans to ignore a cratering economy, or skyrocketing health-care costs, or a failing war. A political movement that fools itself into crafting national policy based on bad evidence is a political movement that will, sooner or later, face a reckoning at the polls.

At least, that’s the hope. But that’s not true on issues, like climate change, where action is needed quickly to prevent a disaster that will happen slowly. There, the reckoning will be for future generations to face. And it’s not true when American politics becomes so warped by gerrymandering, big money, and congressional dysfunction that voters can’t figure out who to blame for the state of the country. If American politics is going to improve, it will be better structures, not better arguments, that win the day.
