The Backfire Effect: The Psychology of Why We Have a Hard Time Changing Our Minds

by Maria Popova

How the disconnect between information and insight explains our dangerous self-righteousness.

“Allow yourself the uncomfortable luxury of changing your mind,” I wrote in reflecting on the 7 most important things I learned in 7 years of Brain Pickings. It’s a conundrum most of us grapple with: on the one hand, the awareness that personal growth means transcending our smaller selves as we reach for a more dimensional, intelligent, and enlightened understanding of the world, and on the other hand, the excruciating growing pains of evolving or completely abandoning our former, inferior beliefs as we integrate new knowledge and insight into our comprehension of how life works. That discomfort, in fact, can be so intolerable that we often go to great lengths to disguise or deny our changing beliefs by paying less attention to information that contradicts our present convictions and more to that which confirms them. In other words, we fail the fifth tenet of Carl Sagan’s timelessly brilliant and necessary Baloney Detection Kit for critical thinking: “Try not to get overly attached to a hypothesis just because it’s yours.”

That humbling human tendency is known as the backfire effect and is among the seventeen psychological phenomena David McRaney explores in You Are Now Less Dumb: How to Conquer Mob Mentality, How to Buy Happiness, and All the Other Ways to Outsmart Yourself (public library) — a fascinating and pleasantly uncomfortable-making look at why “self-delusion is as much a part of the human condition as fingers and toes,” and the follow-up to McRaney’s You Are Not So Smart, one of the best psychology books of 2011. McRaney writes of this cognitive bug:

Once something is added to your collection of beliefs, you protect it from harm. You do this instinctively and unconsciously when confronted with attitude-inconsistent information. Just as confirmation bias shields you when you actively seek information, the backfire effect defends you when the information seeks you, when it blindsides you. Coming or going, you stick to your beliefs instead of questioning them. When someone tries to correct you, tries to dilute your misconceptions, it backfires and strengthens those misconceptions instead. Over time, the backfire effect makes you less skeptical of those things that allow you to continue seeing your beliefs and attitudes as true and proper.

But what makes this especially worrisome is that in the process of exerting effort on dealing with the cognitive dissonance produced by conflicting evidence, we actually end up building new memories and new neural connections that further strengthen our original convictions. This helps explain such gobsmacking statistics as the fact that, despite towering evidence proving otherwise, 40% of Americans don’t believe the world is more than 6,000 years old. The backfire effect, McRaney points out, is also the lifeblood of conspiracy theories. He cites the famous neurologist and conspiracy-debunker Steven Novella, who argues that believers see contradictory evidence as part of the conspiracy and dismiss lack of confirming evidence as part of the cover-up, thus only digging their heels deeper into their position the more counter-evidence they’re presented with.

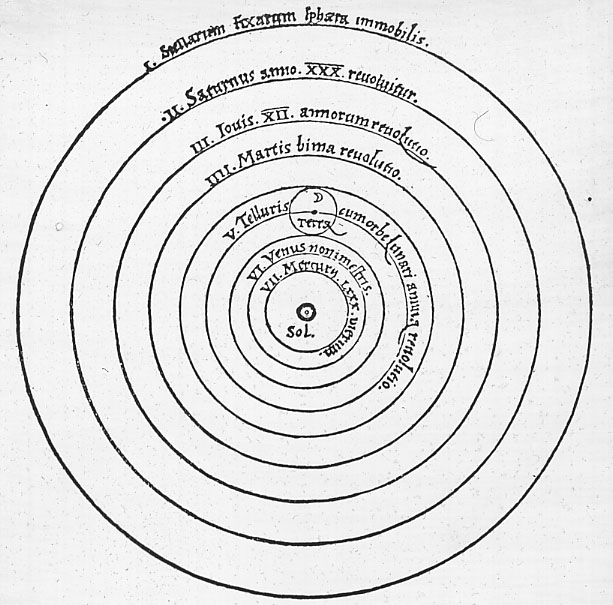

Nicolaus Copernicus's simple yet revolutionary 1543 heliocentric model, which placed the sun rather than Earth at the center of the universe, contradicted the views of the Catholic Church. In 1633, Galileo was detained under house arrest for the remainder of his life for supporting Copernicus's model.

On the internet, a giant filter bubble of our existing beliefs, this can run even more rampant: we see such horrible strains of misinformation as climate change denial and antivaccination activism gather momentum by selectively seeking out “evidence” while dismissing the fact that every reputable scientist in the world disagrees with such beliefs. (In fact, the epidemic of misinformation has reached such heights that we’re now facing a resurgence of once-eradicated diseases.)

McRaney points out that, despite Daniel Dennett’s rules for criticizing intelligently and arguing with kindness, this makes it nearly impossible to win an argument online:

When you start to pull out facts and figures, hyperlinks and quotes, you are actually making the opponent feel even surer of his position than before you started the debate. As he matches your fervor, the same thing happens in your skull. The backfire effect pushes both of you deeper into your original beliefs.

This also explains why Benjamin Franklin’s strategy for handling haters, which McRaney also explores in the book, is particularly effective, and reminds us that this fantastic 1866 guide to the art of conversation still holds true in its counsel: “In disputes upon moral or scientific points, ever let your aim be to come at truth, not to conquer your opponent. So you never shall be at a loss in losing the argument, and gaining a new discovery.”

McRaney points out that the backfire effect is due in large part to our cognitive laziness — our minds simply prefer explanations that take less effort to process, and consolidating conflicting facts with our existing beliefs is enormously straining:

The more difficult it becomes to process a series of statements, the less credit you give them overall. During metacognition, the process of thinking about your own thinking, if you take a step back and notice that one way of looking at an argument is much easier than another, you will tend to prefer the easier way to process information and then leap to the conclusion that it is also more likely to be correct. In experiments where two facts were placed side by side, subjects tended to rate statements as more likely to be true when those statements were presented in simple, legible type than when printed in a weird font with a difficult-to-read color pattern. Similarly, a barrage of counterarguments taking up a full page seems to be less persuasive to a naysayer than a single, simple, powerful statement.

In 1968, shortly after the introduction of the groundbreaking oral contraceptive pill that would revolutionize reproductive rights for generations of women, the Roman Catholic Church declared that the pill distorted the nature and purpose of intercourse. (Public domain photograph via Nationaal Archief)

One particularly pernicious manifestation of this is how we react to critics versus supporters — the phenomenon wherein, as the popular saying goes, our minds become “teflon for positive and velcro for negative.” McRaney traces the crushing psychological effect of trolling — something that takes an active effort to fight — back to its evolutionary roots:

Have you ever noticed the peculiar tendency you have to let praise pass through you, but to feel crushed by criticism? A thousand positive remarks can slip by unnoticed, but one “you suck” can linger in your head for days. One hypothesis as to why this and the backfire effect happen is that you spend much more time considering information you disagree with than you do information you accept. Information that lines up with what you already believe passes through the mind like a vapor, but when you come across something that threatens your beliefs, something that conflicts with your preconceived notions of how the world works, you seize up and take notice. Some psychologists speculate there is an evolutionary explanation. Your ancestors paid more attention and spent more time thinking about negative stimuli than positive because bad things required a response. Those who failed to address negative stimuli failed to keep breathing.

This process is known as biased assimilation and is something neuroscientists have also demonstrated. McRaney cites the work of Kevin Dunbar, who put subjects in an fMRI scanner and showed them information confirming their beliefs about a specific subject, which led brain areas associated with learning to light up. But when faced with contradictory information, those areas didn’t fire; instead, parts associated with thought suppression and effortful thinking lit up. In other words, simply presenting people with information does nothing in the way of helping them internalize it and change their beliefs accordingly.

So where does this leave us? Perhaps a little humbled by our own fallible humanity, and a little more motivated to use tools like Sagan’s Baloney Detection Kit as vital weapons of self-defense against the aggressive self-righteousness of our own minds. After all, Daniel Dennett was right in more ways than one when he wrote, “The chief trick to making good mistakes is not to hide them — especially not from yourself.”

The remainder of You Are Now Less Dumb is just as wonderfully, if uncomfortably, illuminating. Sample it further with the psychology of the Benjamin Franklin Effect, and treat yourself to McRaney’s excellent podcast, You Are Not So Smart, which will, of course, make you smarter.
